Use Ti-dumpling + Ti-binlog to synchronize TiDB data to Klustron

Use Ti-dumpling + Ti-binlog to synchronize TiDB data to Klustron

This article describes how to synchronize the full amount of data from a running TiDB cluster to the Klustron cluster, and then continuously update the streaming data to the Klustron cluster.

This solution is applicable to TiDB whose upstream cluster version is below 5.0 This solution is applicable to tidb whose upstream cluster version is below 5.0

tidb-dumpling

install dumping

./bin/tiup install dumpling:v4.0.16Use dumpling to export data

./components/dumpling/v4.0.16/dumpling -u root -proot -P 40002 -h 192.168.0.132 -o /nvme2/compare/tidb/dumpling_data/ -B tpcc-BSelect the specified database dump- The file after -o dump will be placed in the specified location

ddl2kunlun-linux

This program is to convert the table definition of the mysql database into a table definition that can be used for Klustron wget http://downloads.Klustron.com/kunlun-utils/1.1.1/ddl2kunlun-linux

Example of use

./ddl2kunlun-linux -host="172.0.0.132" -port="40002" -user="root" -password="root" \ -sourceType="mysql" -database="tpcc" -table="tablename" > a.sql-host/// Information-portof the database being exported-user``-password-sourceTypeThe database type of the exported database, default mysql-database/-tableThe database and table name to be exported- You can view the help documentation through ./ddl2kunlun-linux --help

Then write the generated a.sql to the Klustron cluster

psql -h 192.168.0.132 -p 30001 -U abc -d postgres < a.sql

mysql -h 192.168.0.132 -P 30002 -uabc -pabc postgres < a.sql

Both of the above are possible

The database of tidb is mapped to Klustron, which is the schema under the postgres database, so we can use postgres for the database on the command line

Then create a schema named tpcc under the postgres database of the KunlnBase cluster to map the tidb database named tpcc

Some sql statements in the generated table definition

[kunlun@kunlun-test8 .tiup]$ head -5 a.sql CREATE TABLE tpcc.warehouse ( w_id bigint not null, w_name varchar(10) , w_street_1 varchar(20) , w_street_2 varchar(20) ,Use a for loop script to run

Because the tool can only export one table at a time, it will be more frequent

You can refer to the following script to automatically import the converted table definition into Klustron

echo `show databases;` > test.sql for i in `mysql -h 172.0.0.132 -uroot -proot -P40002 tpcc < test.sql | grep -v Tables_in_` do ./ddl2kunlun-linux -host="172.0.0.132" -port="40002" -user="root" -password="root" -sourceType="mysql" -database="tpcc" -table="$i" > a.sql echo create table $i psql -h 172.0.0.132 -p 35001 -U abc -d postgres < a.sql done

The reason why the postgres database is used here is because the tidb ( mysql ) database is mapped to the postgres schema in Klustron

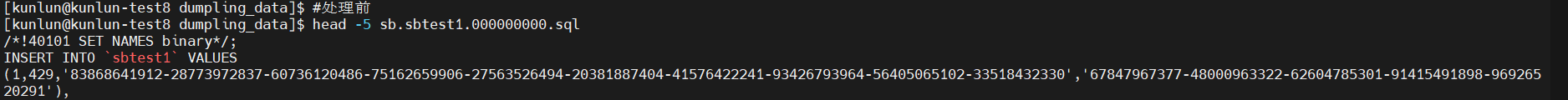

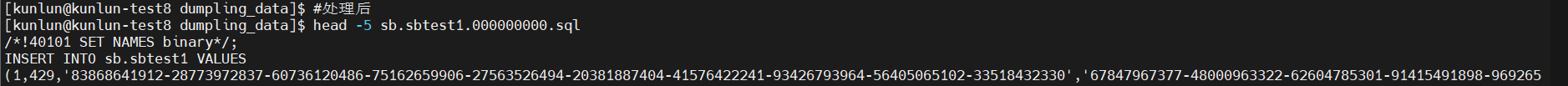

Modify the sql generated by dumpling

In the sql generated by dumping, the insert table does not have a schema (corresponding to the mysql database), so we need to add the schema (corresponding to the mysql database) in the file

It is not recommended to manually add one by one to the file by yourself, because dumping will generate a lot of INSERT INTO statements in the face of a table with a large amount of data

Note that we don’t care about the table definition file generated by dumping. The table definition has been completed with ddl2kunlun-linux earlier (that is, the file whose file name structure is db.table-schema.sql)

The metadata file is only used later, so it doesn’t matter here

for i in `ls /nvme2/compare/tidb/dumpling_data | grep -v schema | grep sql` do cd /nvme2/compare/tidb/dumpling_data/ table=`echo $i | awk -F. '{print $2}'` db=`echo $i | awk -F. '{print $1}'` sed -i "s/\`$table\`/${db}.$table/" $i sed -i 's/0000-00-00/1970-01-01/' $i cd ~/.tiup done

Write the sql file generated by dumping to Klustron

You can use mysql or pg to irrigate, depending on your preference

psql -h 192.168.0.132 -p 30001 -U abc -d tpcc < tpcc.customer.000000000.sqlmysql -h 192.168.0.132 -P 30002 -uabc -pabc tpcc < tpcc.customer.000000000.sqlThe current test volume is 100 warehouses

If you want to go into Klustron to view the data, you need to switch the schema

- set search_path to schema

Start TiDB-drainer to synchronize data flow to Klustron

For details, refer to the official TiDB tutorial

Install the pump and drainer tools

cd ~/.tiup./bin/tiup install pump:v4.0.16./bin/tiup install drainer:v4.0.16- To modify

pump.tomlanddrainer.tomlthese two configuration files, you can refer to the template on the official website

start up

cd ~/.tiup ./components/drainer/v4.0.16/drainer -config drainer.toml -initial-commit-ts {initial-commit-ts} &

TiDB-binlogctl

During the running process, you can use binlogctl to monitor the pump and drainer

Download method: wget https://download.pingcap.org/tidb-community-server-v4.0.16-linux-amd64.tar.gz

- After decompression, cd into the offline package

- binlogctl is under ctl-v4.0.16-linux-amd64.tar.gz and needs to be decompressed

Command to view pump status

./binlogctl -pd-urls=http://192.168.0.132:2379 -cmd drainers

[2022/10/21 17:11:42.513 +08:00] [INFO] [nodes.go:53] ["query node"] [type=drainer] [node="{NodeID: kunlun-test8:8321, Addr: 192.168.0.132:8321,

State: paused, MaxCommitTS: 436819730621530117, UpdateTime: 2022-10-21 14:52:11 +0800 CST}"]

Command to view drainer status

./binlogctl -pd-urls=http://192.168.0.132:2379 -cmd pumps

[2022/10/21 17:18:25.972 +08:00] [INFO] [nodes.go:53] ["query node"] [type=pump] [node="{NodeID: 192.168.0.132:8912, Addr: 192.168.0.132:8912,

State: online, MaxCommitTS: 436822038851878913, UpdateTime: 2022-10-21 17:17:19 +0800 CST}"]

[2022/10/21 17:18:25.972 +08:00] [INFO] [nodes.go:53] ["query node"] [type=pump] [node="{NodeID: 192.168.0.134:8912, Addr: 192.168.0.134:8912,

State: online, MaxCommitTS: 436822039271309320, UpdateTime: 2022-10-21 17:17:20 +0800 CST}"]

[2022/10/21 17:18:25.972 +08:00] [INFO] [nodes.go:53] ["query node"] [type=pump] [node="{NodeID: 192.168.0.136:8912, Addr: 192.168.0.136:8912,

State: online, MaxCommitTS: 436822039205773313, UpdateTime: 2022-10-21 17:17:20 +0800 CST}"]

- For other binlogctl commands, see the binlogctl documentation